Many people, upon hearing “automated testing,” immediately think of unit tests. That’s understandable; after all, unit testing is one of the best-known types of automated tests. However, it’s far from the only one: there’s also UI testing, end-to-end testing, and load testing, to name just a few.

Today’s post—as you can see from the “unit test vs. integration test” in its title—focuses on two types of automated tests and how they relate to one another.

Here’s the list of topics we’ll be covering:

- Defining unit testing

- Unit test rules

- Why is isolation good for unit tests?

- Integration test

- Unit test vs. integration test: what’s the same, what’s different, when to use which

- Unit test vs. integration test in the context of white-box and black-box testing

- What about the cost?

But that’s not all. We’ll also touch on some adjacent topics. For instance, you’ll learn how functional testing fits into the picture, and how unit and integration tests relate to white-box and black-box testing.

Let’s get started.

Defining Unit Testing

Unit testing is a type of automated testing meant to verify whether a small and isolated piece of the codebase, the so-called “unit,” behaves as the developer intended. An individual unit test, or “test case,” is a piece of code that exercises the production code in some way and then verifies whether the result matches what was expected. So, unlike UI tests, unit tests don’t exercise the user interface at all; they interact directly with the underlying API.
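Here is a minimal sketch in Python that makes this concrete. The `apply_discount` function and its tests are hypothetical. They are written as plain `test_`-prefixed functions, which is the style a runner such as pytest picks up. Each test calls the production code directly and checks the result, with no UI involved.

```python
# Production code: the "unit" under test (a hypothetical example).
def apply_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Test cases: each one exercises the unit, then verifies the result.
def test_applies_percentage_discount():
    assert apply_discount(200.0, 25) == 150.0


def test_zero_percent_leaves_price_unchanged():
    assert apply_discount(99.99, 0) == 99.99


def test_rejects_invalid_percentage():
    try:
        apply_discount(100.0, 150)
    except ValueError:
        pass  # expected: invalid input is rejected
    else:
        raise AssertionError("expected ValueError")
```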

Among all types of testing—manual or automated—unit testing is definitely a unique case. Most types of testing have the final user or stakeholder as the target audience, so to speak. These types of tests serve as “evidence” that the users’ requirements were met.

Unit tests, on the other hand, are different. Developers both write and read them, and they don’t write them with the final user in mind. Instead, developers write these tests to check whether the code they wrote does what they intended. Having a comprehensive suite of unit tests in place allows developers to code more confidently, because they feel protected by the safety net of tests.

Unit Test Rules

Up until now, we’ve figured out some essential properties of unit tests. We express unit tests using code, unlike more visual types of tests. Also, developers are both their creators and their main consumers, unlike other types of tests (such as acceptance tests), which target final users or stakeholders.

Now it’s time to learn the most important characteristic of unit tests, which really separates them from integration tests.

To do that, let’s look at a famous definition of unit testing proposed by Michael Feathers. It goes like this:

A test is not a unit test if:

- It talks to the database

- It communicates across the network

- It touches the file system

- It can’t run at the same time as any of your other unit tests

- You have to do special things to your environment (such as editing config files) to run it
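A common way to keep a test within these rules is to have the code accept an already-open stream instead of a file path. The sketch below is hypothetical. In production, callers would pass the result of `open(...)`. The unit test passes an in-memory `io.StringIO` instead, so it never touches the file system.

```python
import io
from typing import TextIO


def count_error_lines(stream: TextIO) -> int:
    """Count the lines in a log stream that start with 'ERROR'."""
    return sum(1 for line in stream if line.startswith("ERROR"))


# In production, a caller might do (hypothetical file name):
#     with open("app.log") as f:
#         count_error_lines(f)
# The unit test below uses an in-memory stream instead, so it needs no files,
# no special environment setup, and can run alongside any other test.
def test_counts_only_error_lines():
    log = io.StringIO(
        "INFO starting\n"
        "ERROR disk full\n"
        "WARN retrying\n"
        "ERROR disk still full\n"
    )
    assert count_error_lines(log) == 2
```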

Why Is Isolation Good for Unit Tests?

First, there’s speed, arguably the most important property of a good unit test. Relying on things like the database or the file system slows tests down.

Then, we have determinism. A unit test must be deterministic. That is to say, if it’s failing, it has to continue to fail until someone changes the code under test. The opposite is also true: if a test is currently passing, it shouldn’t start failing without changes to the code it tests.

If a test relies on other tests or on external dependencies, its status might change for reasons other than a change to the code under test.
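Hidden inputs such as the current time are a frequent source of non-determinism. In the hypothetical sketch below, the function receives the time as a parameter and does not call `datetime.now()` itself. The test therefore fully controls its inputs and gets the same result on every run.

```python
from datetime import datetime


def is_business_hours(now: datetime) -> bool:
    """Return True on weekdays between 09:00 (inclusive) and 17:00 (exclusive)."""
    return now.weekday() < 5 and 9 <= now.hour < 17


# Calling datetime.now() inside the function would make the outcome depend on
# when the test runs. Passing fixed datetimes keeps the test deterministic.
def test_weekday_morning_is_business_hours():
    assert is_business_hours(datetime(2024, 3, 4, 10, 30))  # a Monday


def test_saturday_is_not_business_hours():
    assert not is_business_hours(datetime(2024, 3, 9, 10, 30))  # a Saturday
```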

Finally, we have the scope of the feedback. An ideal unit test covers a specific and small portion of the code. When that test fails, it’s almost guaranteed that the problem lies in that specific portion of the code. In other words, a proper unit test is a fantastic tool for obtaining super precise feedback.

If, on the other hand, a test relies on the database, the file system, and another test, the problem could be in any one of those places.

Integration Test

To define integration testing, we’ll enlist the help of Michael Feathers again. He goes on to say:

Tests that do these things aren’t bad. Often they are worth writing, and they can be written in a unit test harness. However, it is important to be able to separate them from true unit tests so that we can keep a set of tests that we can run fast whenever we make our changes.

So, unit tests shouldn’t rely on anything on that list, because doing so would make them slow and non-deterministic and would harm their ability to provide precise feedback. However, we still need to test code that interfaces with external dependencies somehow. As you’ve just read, tests that do so aren’t bad; they’re often worth writing.

What do we call this type of testing? You’ve guessed it: integration testing.
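The sketch below shows what such a test can look like. The `UserRepository` class is hypothetical. It talks to a real database through Python’s built-in `sqlite3` module, and the tests run it against that database, not a mock. SQLite’s in-memory mode is used here only so the sketch runs anywhere. In a real project, an integration test would typically target the same kind of database the application uses in production.

```python
import sqlite3
from typing import Optional


class UserRepository:
    """Hypothetical data-access class that talks to a real SQL database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT)"
        )

    def add(self, email: str, name: str) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO users (email, name) VALUES (?, ?)", (email, name)
            )

    def find_name(self, email: str) -> Optional[str]:
        row = self.conn.execute(
            "SELECT name FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row[0] if row else None


# Integration tests: they exercise the repository together with a real database
# engine, so the SQL itself and the primary-key constraint are tested too.
def test_saves_and_loads_user():
    conn = sqlite3.connect(":memory:")
    try:
        repo = UserRepository(conn)
        repo.add("ada@example.com", "Ada")
        assert repo.find_name("ada@example.com") == "Ada"
        assert repo.find_name("missing@example.com") is None
    finally:
        conn.close()


def test_rejects_duplicate_email():
    conn = sqlite3.connect(":memory:")
    try:
        repo = UserRepository(conn)
        repo.add("ada@example.com", "Ada")
        try:
            repo.add("ada@example.com", "Someone else")
        except sqlite3.IntegrityError:
            pass  # expected: the database enforces uniqueness
        else:
            raise AssertionError("expected IntegrityError")
    finally:
        conn.close()
```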

Unit Test vs. Integration Test: A Summary

Based on what we’ve just seen, we’re ready to summarize. What are the differences and similarities between the two approaches? When should you use which? Let’s get to it.

What’s the Same

Let’s start with what the approaches have in common. Both unit testing and integration testing are types of testing that require coding (in contrast to forms of testing that rely on screen recording, for instance).

You can perform both types of testing using similar or even the same tools. Additionally, it’s recommended that you add both forms of testing to your CI/CD pipeline.

What’s Different

To summarize the differences between the two approaches, we’ll use a table:

| Unit Testing | Integration Testing |
|---|---|
| Unit tests mock interactions with external dependencies (database, I/O, etc.). | Integration tests use the real dependencies. |
| Developers write unit tests. | Testers or other QA professionals usually write integration tests. |
| Unit tests provide precise feedback. | Integration tests provide less precise feedback. |
| Unit tests exercise a unit without requiring other units to be complete. | Integration tests require the units involved to be complete. |
| Developers run unit tests on their machines frequently. | Developers run integration tests on their machines less frequently, or not at all. |
| The CI server runs the unit test suite on every commit to the main branch or check-in. | The CI server might run integration tests less often, since they need a more involved setup process and are slower to run. |
| Unit tests are easier to write. | Integration tests are harder to write. |
| Unit tests can be run in any order, or even simultaneously. | Integration tests often require a strict order and can’t always be run simultaneously. |
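The first row of the table is the core of the distinction. Here is a hypothetical sketch: a `register_user` function whose unit tests replace its data-access dependency with a `unittest.mock.Mock`. No database is involved, and the tests check only the function’s own logic. An integration test of the same function would run it against a real repository and database.

```python
from unittest.mock import Mock


def register_user(repo, email: str, name: str) -> bool:
    """Register a user unless the email is already taken.

    `repo` is any object with find_name(email) and add(email, name) methods
    (a hypothetical interface, e.g. a class backed by a SQL database).
    """
    normalized = email.strip().lower()
    if repo.find_name(normalized) is not None:
        return False
    repo.add(normalized, name)
    return True


# Unit tests: the repository is a mock, so no database is touched.
def test_registers_new_user_with_normalized_email():
    repo = Mock()
    repo.find_name.return_value = None  # simulate "email not taken"
    assert register_user(repo, "  Ada@Example.com ", "Ada") is True
    repo.add.assert_called_once_with("ada@example.com", "Ada")


def test_does_not_register_duplicate_email():
    repo = Mock()
    repo.find_name.return_value = "Ada"  # simulate "email already taken"
    assert register_user(repo, "ada@example.com", "Ada") is False
    repo.add.assert_not_called()
```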

When to Use Which?

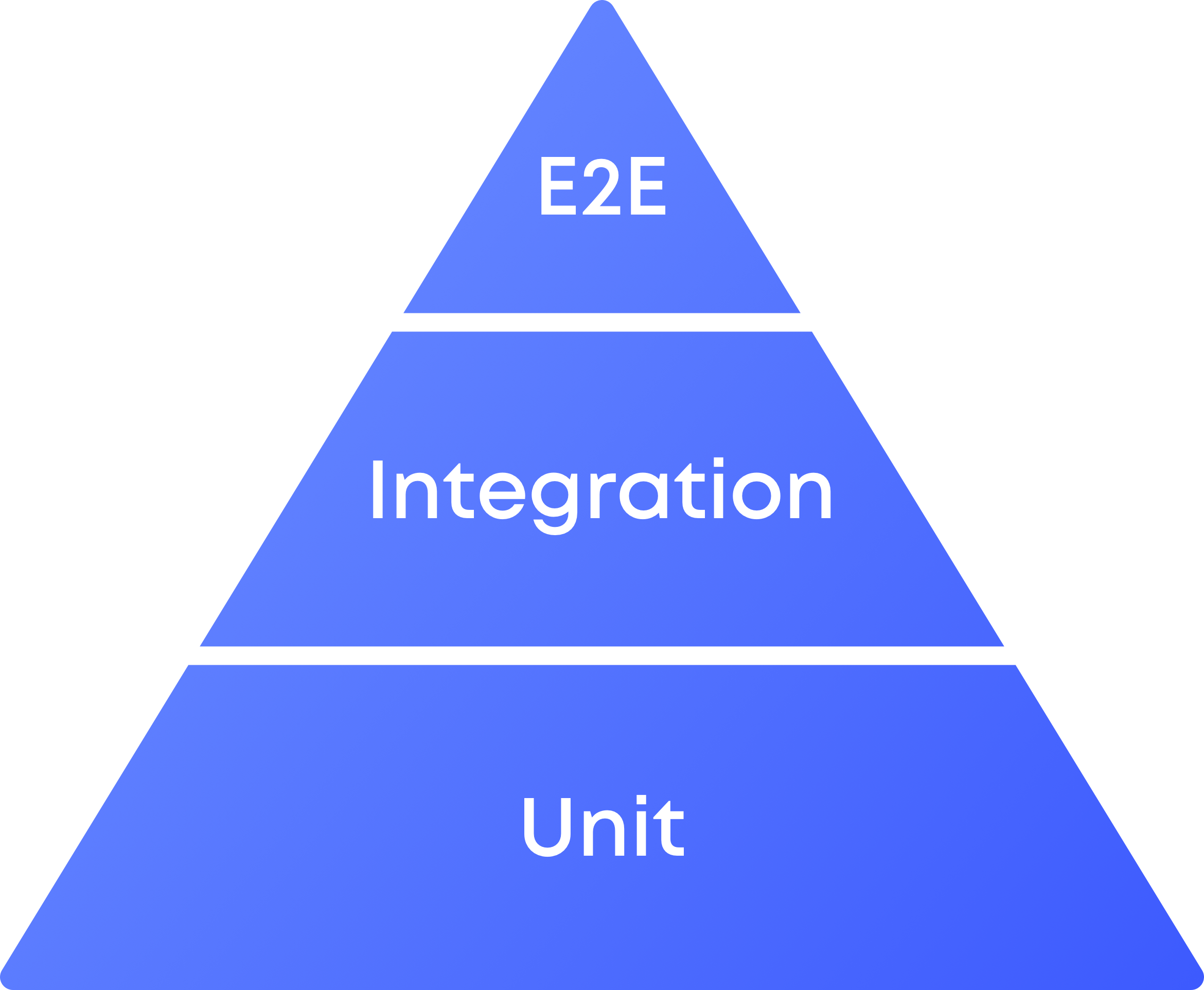

To understand when to use which approach, we must consider the concept called The Test Pyramid, which is a way of thinking about tests, showing that you should prioritize fast unit tests above other slower types of testing. Though there are many possible variations of this component, here’s a common depiction of it:

So, maximize the use of unit testing by applying it to the portions of your codebase that deal with business and domain logic and don’t talk to external dependencies. Architect your application so that the code dealing with external dependencies (I/O) is isolated, and strive to keep that code as small as possible. Then, test it with integration tests.
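The sketch below applies this advice using an approach sometimes called “functional core, imperative shell.” All names in it are hypothetical. The business rule lives in a pure function that unit tests can cover cheaply and exhaustively. A thin wrapper does the I/O and is left to integration tests.

```python
import json
from pathlib import Path


# Pure business logic: no I/O, so it is cheap to cover with many unit tests.
def invoice_total(lines: list[dict], tax_rate: float) -> float:
    """Sum quantity * unit_price over all lines and apply tax."""
    subtotal = sum(line["quantity"] * line["unit_price"] for line in lines)
    return round(subtotal * (1 + tax_rate), 2)


# Thin I/O shell: reads a JSON file and delegates to the pure function.
# It is kept small on purpose; this is the part to cover with integration tests.
def invoice_total_from_file(path: Path, tax_rate: float) -> float:
    lines = json.loads(path.read_text())
    return invoice_total(lines, tax_rate)


# Unit tests target only the pure core.
def test_invoice_total_applies_tax():
    lines = [
        {"quantity": 2, "unit_price": 10.0},
        {"quantity": 1, "unit_price": 5.0},
    ]
    assert invoice_total(lines, 0.1) == 27.5


def test_empty_invoice_is_zero():
    assert invoice_total([], 0.2) == 0.0
```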

What About Functional Testing?

I know the post’s title only mentions unit and integration tests, but I thought I’d briefly throw a third type of testing into the mix to spice things up. I’m talking about functional testing.

You can think of functional testing as being even higher level than both unit and integration tests. A functional test exercises a given feature of the application from the point of view of the user. Functional testing, like integration testing, requires the integration of different modules or layers in the application.

However, unlike integration tests, functional tests are meant to check the application from a functional perspective (hence the name), which means they usually drive the application through its UI. A useful way to think about functional tests is as a middle ground between integration and end-to-end testing.

Unit Test vs. Integration Test in the Context of White-Box and Black-Box Testing

White-box testing refers to types of software testing in which the tests are designed with knowledge of the application’s internal structure or implementation. Black-box testing, on the other hand, is the exact opposite: tests in this category know nothing about the application’s internals and interact with it only through its external interface.

Does this distinction really matter? Yes, and for a few reasons.

Why the Distinction Matters

First, it matters in regard to who performs the testing. Types of software testing that fall into the black-box bucket tend not to require coding skills (e.g., UI testing or codeless end-to-end testing). The same goes for manual types of testing, such as manual exploratory testing; yes, manual testing is still an important part of a comprehensive testing strategy.

On the other hand, some classic examples of white-box testing (yes, unit testing is usually one of them) tend to be code-based tests. Knowing about the distinction helps organizations make educated decisions about which forms of testing best suit their needs.

The white-box vs. black-box discussion also matters when thinking about test robustness. Tests that rely too heavily on a system’s internal implementation can be fragile: they may break when that implementation changes even though the behavior stays the same, which makes test maintenance more laborious.

Unit Test vs. Integration Test: Black, White, or Grey?

So, what about unit testing and integration testing? Are they white-box or black-box? The correct answer would be neither, or both. Instead of black and white, the reality is more like shades of grey.

Unit Testing

Unit testing usually falls into the white-box bucket. Not only does it require coding skills, but it also usually relies on the implementation of the code. However, there are situations in which unit testing can be considered black-box testing. It depends on the approach you use when writing the tests.

For instance, your team might practice TDD (test-driven development) using the approach known as inside-out TDD. Also known as the classic school, the Chicago school, or bottom-up TDD, this approach consists of developing an application driven by low-level unit tests. Then, you gradually work your way up toward higher levels, where you can write tests at the acceptance level.

In this scenario, the unit tests would certainly be white-box tests, since they’d be very dependent on the code.

On the other hand, you or your team might favor the outside-in approach to TDD, also known as the London school, the mockist school, or top-down TDD. Using this approach, developers would start by writing higher-level unit tests, using test doubles to stand in for dependencies that are yet to be written. Then, they’d gradually work their way in toward the internals of the application.

In this context, the higher-level unit tests at the beginning can be considered black-box testing, since they’re more concerned with the final API than with what the internal implementation looks like.
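Here is a hypothetical sketch of the outside-in style. The high-level `CheckoutService` is written first. Its tests supply a hand-written test double for a `PaymentGateway` that doesn’t exist yet. The `Protocol` only describes the API the service needs. The tests check the service’s observable behavior and don’t depend on how the real gateway will eventually work.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The interface CheckoutService needs; no real implementation exists yet."""

    def charge(self, amount_cents: int) -> bool: ...


class CheckoutService:
    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def checkout(self, cart_cents: list[int]) -> str:
        total = sum(cart_cents)
        if total == 0:
            return "empty cart"
        return "paid" if self.gateway.charge(total) else "payment declined"


# Test double standing in for the not-yet-written gateway; it returns a canned
# answer and records the amounts it was asked to charge.
class StubGateway:
    def __init__(self, succeed: bool) -> None:
        self.succeed = succeed
        self.charged: list[int] = []

    def charge(self, amount_cents: int) -> bool:
        self.charged.append(amount_cents)
        return self.succeed


def test_successful_checkout_charges_cart_total():
    gateway = StubGateway(succeed=True)
    assert CheckoutService(gateway).checkout([500, 250]) == "paid"
    assert gateway.charged == [750]


def test_declined_payment_is_reported():
    gateway = StubGateway(succeed=False)
    assert CheckoutService(gateway).checkout([100]) == "payment declined"


def test_empty_cart_does_not_charge():
    gateway = StubGateway(succeed=True)
    assert CheckoutService(gateway).checkout([]) == "empty cart"
    assert gateway.charged == []
```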

Integration Testing

With integration testing, we have a similar scenario: integration tests can be either white-box or black-box.

Let’s say I write a React app that consumes the GitHub API. I could create a component that allows me to type in a username and see the repos that belong to that user. I’d like to test such a component in a way that uses the real API. Would that be black-box or white-box testing?

Well, from my perspective, the GitHub API is certainly a black box. I don’t know how it works internally. I just rely on its public interface and expect it won’t change.

I could, however, write a test that integrates different parts of my application, in a way that relies on its internal structure. In such a scenario, I could say my tests are white-box tests.

What About the Cost?

An important factor we can’t overlook when thinking about software testing is how much it costs. It’s essential to discuss and measure the ROI of test automation.

Generally speaking, unit tests are cheaper. They’re easier to write (unless you’re trying to add them to an existing app), which means developers don’t spend as much time writing them. They’re also cheaper to run: they don’t usually require you to do special things to your environment or obtain external resources.

When it comes to speed, unit tests run faster, because they don’t rely on slow, external resources. Also, since they’re completely isolated, you can run them in parallel, saving even more time.

All of that doesn’t mean integration tests are “bad” and that you shouldn’t use them. Quite the opposite, in fact. Since they provide a different kind of feedback, they’re just as important as unit tests.

However, since they have a higher cost, you should be strategic about when to leverage them. The test pyramid mentioned earlier is a good model to keep in mind when deciding which types of tests to prioritize.

Unit Test vs. Integration Test? No, Unit Test AND Integration Test

There are many types of automated testing. Every one of them has its place since each one fulfills a specific need. Unit testing and integration testing are no exception.

In this post, you’ve learned the similarities and differences between these two automated testing techniques, and seen that one’s strength is the other’s weakness, and vice versa. Unit testing offers developers a safety net in the form of fast, deterministic tests with incredibly precise feedback.

On the other hand, integration tests can go where unit tests can’t, by using real dependencies, which allows them to test scenarios that more closely resemble the final user’s experience.

As it turns out, the “vs.” is out of place in the post title. The two approaches don’t compete against each other: they complement each other, and you need both.